Xuanwu CLI: OpenClaw on Domestic AI Chips in 5 Minutes

The AI agent revolution has arrived — and domestic hardware is no longer left behind.

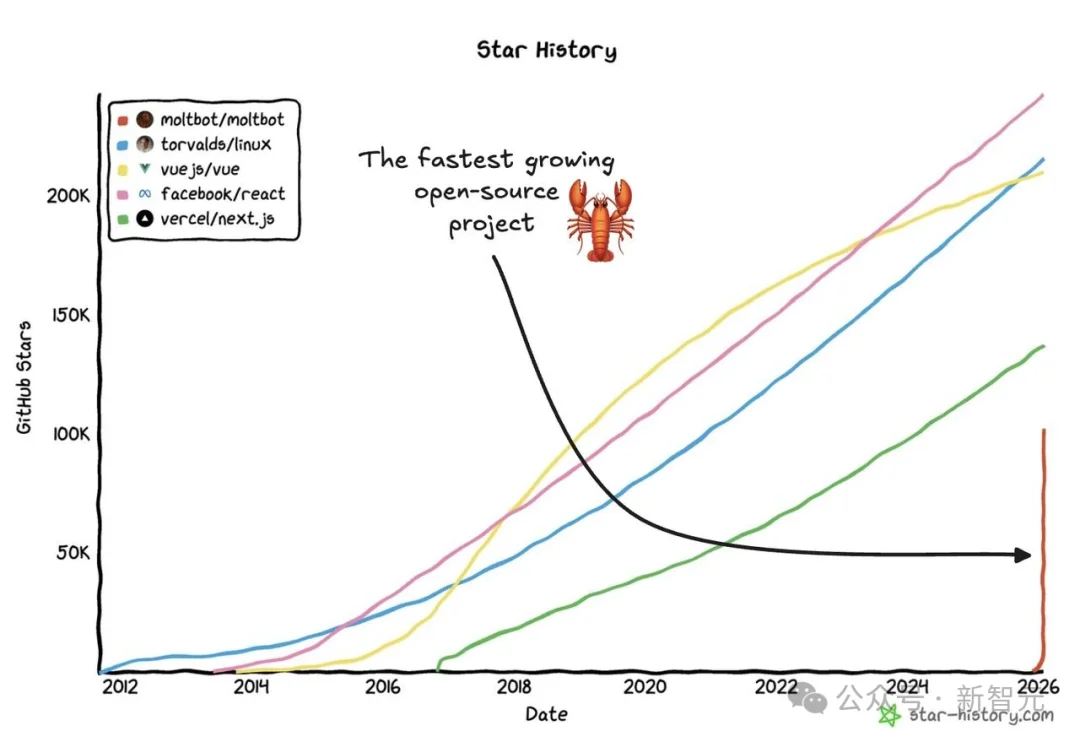

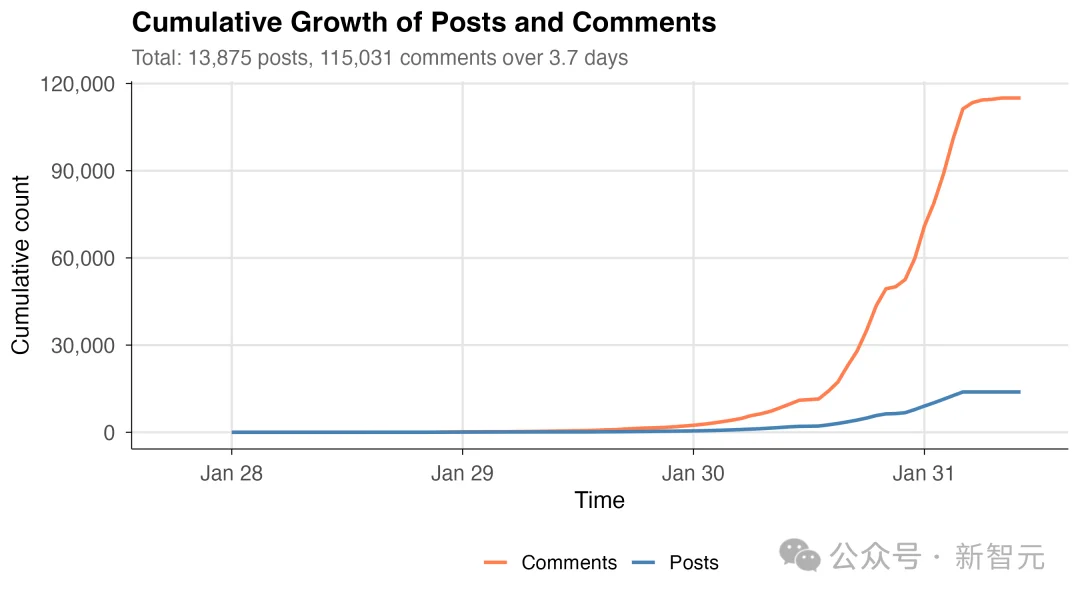

For weeks, the global AI community has been electrified by Clawdbot, an autonomous, always-on (24/7) “AI Jarvis” capable of browsing, posting, commenting, and executing complex workflows. Its open-source repository (now rebranded as OpenClaw) has passed 130,000 GitHub stars, marking a watershed moment for practical AI agents.

But there’s a critical bottleneck: cost and compatibility.

⚠️ The Hidden Cost of Cloud-Based Agents

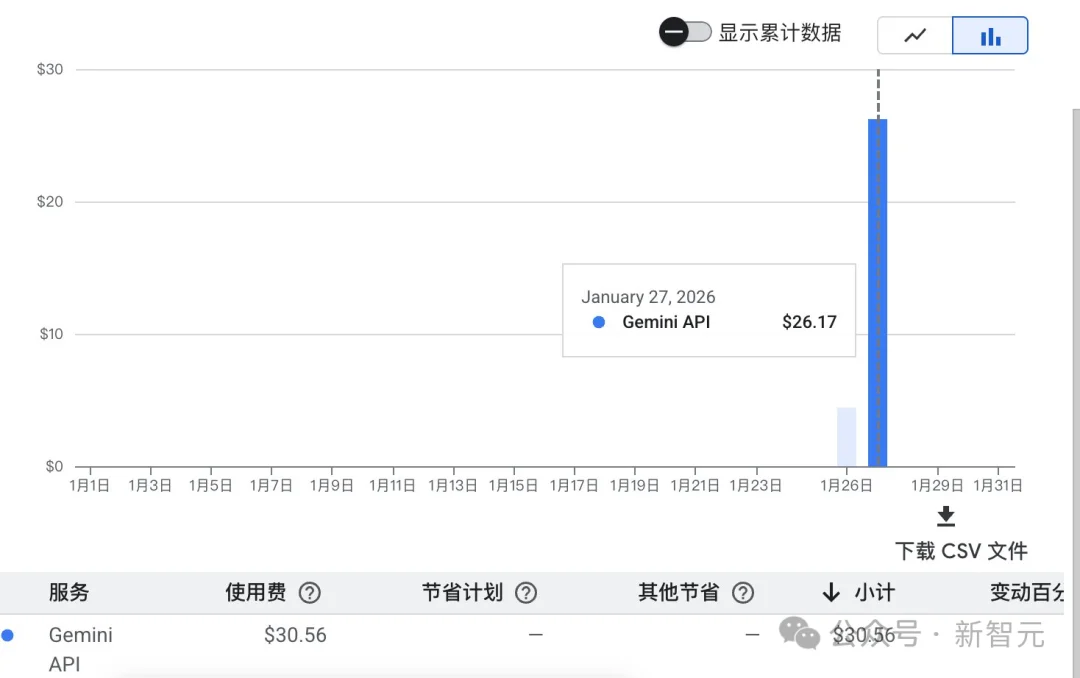

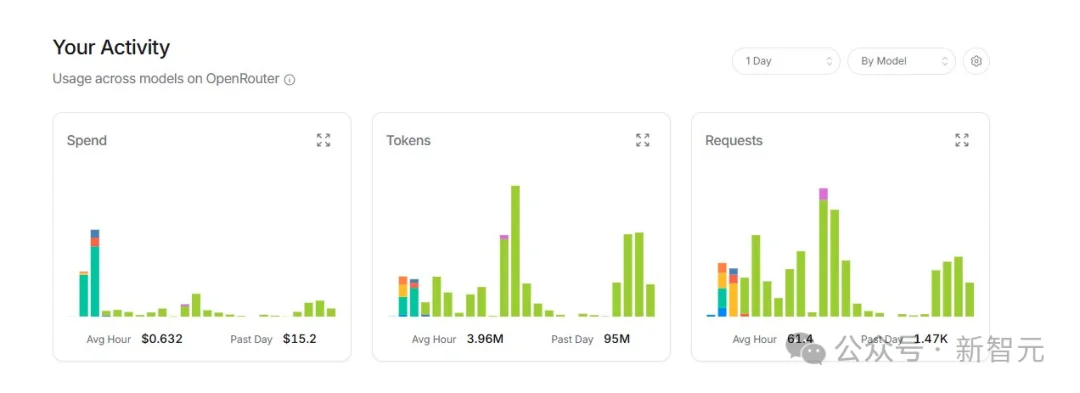

Clawdbot’s real-time operations consume LLM tokens at a massive rate, especially when deployed across platforms like Moltbook. Users report daily bills of $30–$50 or more, even with light usage.
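To make the cost pressure concrete, here is a back-of-the-envelope sketch of how a daily bill in that range can arise. The token volumes and per-million-token prices below are hypothetical placeholders chosen for illustration, not figures from any provider or from the reports above.

```python
def daily_cost_usd(input_tokens, output_tokens, usd_per_m_input, usd_per_m_output):
    """Estimate one day's API bill from token counts and per-million-token prices."""
    total = input_tokens * usd_per_m_input + output_tokens * usd_per_m_output
    return total / 1_000_000


# Hypothetical day: 8M input tokens and 1M output tokens,
# priced at $3 and $15 per million tokens respectively (assumed values).
cost = daily_cost_usd(8_000_000, 1_000_000, 3.0, 15.0)
print(f"${cost:.2f} per day, about ${cost * 30:.0f} per month")
```

An agent that re-reads long contexts on every step accumulates input tokens quickly, which is why the input side dominates this example.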

Enter local inference: free, private, and controllable. Tools like Ollama have become the de facto standard, but only for NVIDIA CUDA and Apple Silicon. For users of Huawei Ascend, Moore Threads, MetaX, Hygon, Kunlunxin, or Photonics chips, deployment remains arduous:

- Fragmented ecosystems (CANN, MUSA, MACA, etc.)

- Manual driver installation & environment compilation

- Week-long debugging cycles

- Zero unified API or tooling

As one developer put it: “It’s not that I don’t want to support domestic chips — I just can’t get it running.”

🐉 Introducing Xuanwu CLI: The Domestic Ollama

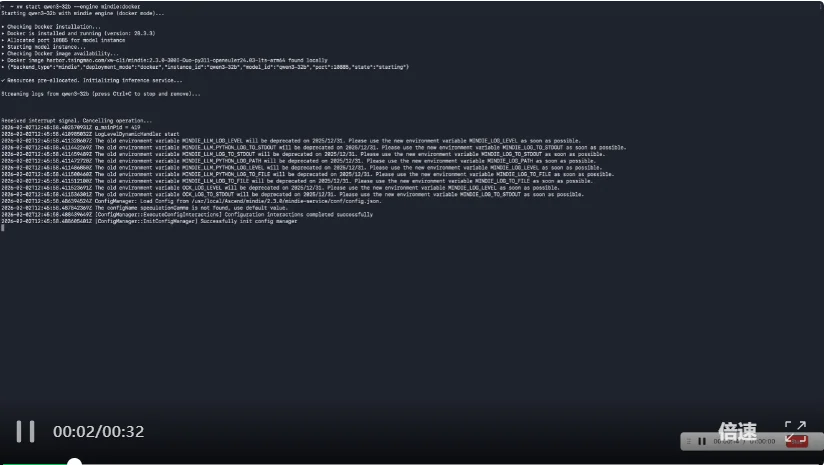

On February 2, 2026, Tsingmao AI open-sourced Xuanwu CLI — a purpose-built, zero-config CLI for deploying large language models on China’s heterogeneous AI accelerators.

✅ Key Features

| Feature | Description |
|---|---|
| ⏱️ 1-Minute Setup | Docker-based; extract and run. Requires only Docker and the vendor drivers. |
| ⚡ Sub-30s Model Load | Models up to 32B parameters (e.g., Qwen3-32B) load in under 30 seconds. |
| 🔁 Ollama-Compatible Syntax | Same command set: `xw serve`, `xw pull`, `xw run`, `xw list`, `xw ps`. |
| 🔍 Auto-Hardware Detection | Detects the chip architecture (Ascend, MetaX, etc.) and automatically selects the optimal inference engine. |
| 🛡️ Fully Offline & Private | No internet required. All weights and data stay local, which suits enterprise security requirements. |
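The auto-detection feature can be pictured as a lookup from the detected chip vendor to an inference engine. The sketch below is purely illustrative: the article does not describe how Xuanwu CLI detects hardware, so the vendor keys, the `select_engine` helper, and the fallback choice are all assumptions. Only the Ascend/MindIE pairing and the vLLM engine name come from the article itself.

```python
# Illustrative only: not Xuanwu CLI's actual detection logic.
ENGINE_BY_VENDOR = {
    "ascend": "MindIE",  # pairing the article mentions for Ascend
}
DEFAULT_ENGINE = "vLLM"  # assumed fallback for this sketch


def select_engine(vendor):
    """Return an inference engine name for a detected chip vendor string."""
    return ENGINE_BY_VENDOR.get(vendor.strip().lower(), DEFAULT_ENGINE)


print(select_engine("Ascend"))
print(select_engine("Moore Threads"))
```

Keeping the mapping in a table rather than in branching code means adding a new chip family is a one-line change.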

```bash
# Example workflow, identical to Ollama
xw pull qwen3-32b
xw run qwen3-32b
```

🤖 Powering OpenClaw on Domestic Hardware

Xuanwu CLI isn’t just a model runner — it’s the backend inference layer for AI agents.

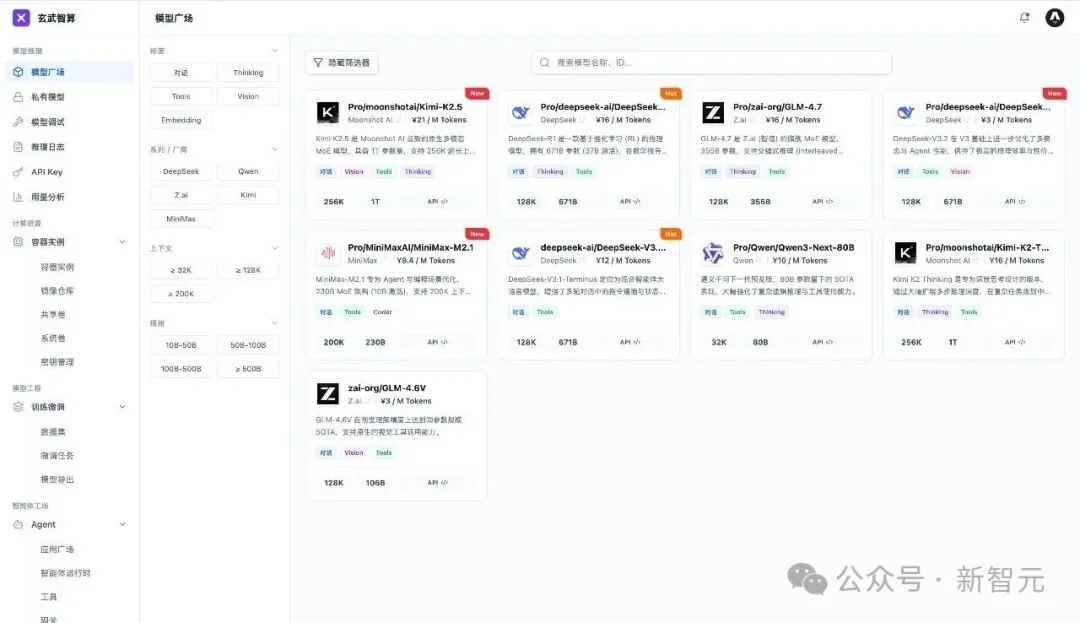

By exposing a fully OpenAI-compatible REST API, it integrates seamlessly with:

- LangChain & LlamaIndex applications

- IDE plugins (e.g., Cursor, Windsurf)

- Clawdbot / OpenClaw, now deployable natively on Huawei Ascend, MetaX (Muxi), Enflame (Suiyuan), and more.
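Because the API follows the OpenAI wire format, an existing client should only need a different base URL. The sketch below builds a chat-completion request using the Python standard library. The host, port, and helper names are assumptions for illustration (the article does not say where `xw serve` listens). `/v1/chat/completions` is the standard OpenAI-style path, and `qwen3-32b` is the model name used in the workflow example.

```python
import json
import urllib.request

# Assumption: placeholder address; replace with wherever your `xw serve` instance listens.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt, model="qwen3-32b", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize today's tasks in one sentence.")
    print(req.full_url)
    # print(send(req))  # uncomment once a local server is running
```

Agent frameworks that accept a custom OpenAI base URL can be pointed at the same endpoint in the same way, without code changes on the agent side.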

💡 Think of it as your domestic “AI Jarvis” stack: Xuanwu CLI powers the brain, OpenClaw orchestrates the actions.

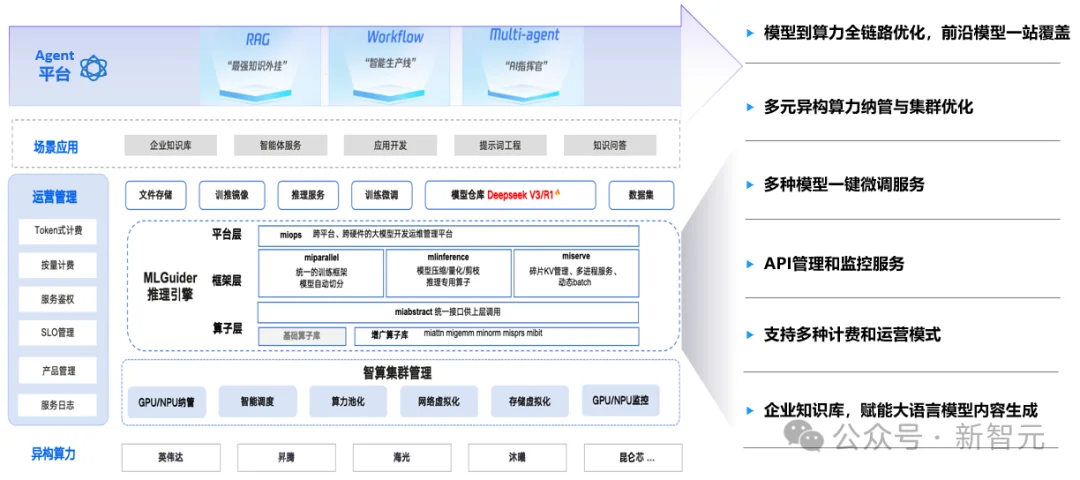

🌐 Enterprise-Ready: Xuanwu Cluster Edition

For data centers and large-scale deployments, Xuanwu Cluster Edition delivers unified orchestration across 10+ domestic chip families, including:

- Huawei Ascend

- Cambricon MLU

- Kunlunxin

- Moore Threads

- MetaX (Muxi)

- SOPHGO (Sophon)

- Hygon DCU

🔑 Core Capabilities:

- Unified heterogeneous resource scheduling (breaks vendor lock-in)

- Built-in API governance & metering/billing (supports internal chargeback & external monetization)

- Multi-engine support: MindIE (Ascend), vLLM, MLGuider (proprietary), and native frameworks

- Production-grade stability: designed for inference clusters of 1,000+ accelerator cards
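The metering and chargeback capability can be illustrated with a small aggregation over per-request usage records. This is a sketch under stated assumptions, not the Cluster Edition's actual billing logic: the record layout, the `chargeback` helper, and the rate are hypothetical, and the `prompt_tokens` / `completion_tokens` field names simply mirror the usage object in OpenAI-style responses.

```python
from collections import defaultdict

# Hypothetical internal rate, in USD per million tokens.
USD_PER_MILLION_TOKENS = 0.50


def chargeback(usage_records, rate=USD_PER_MILLION_TOKENS):
    """Sum tokens per team and convert the totals to a cost in USD."""
    tokens_by_team = defaultdict(int)
    for record in usage_records:
        tokens_by_team[record["team"]] += (
            record["prompt_tokens"] + record["completion_tokens"]
        )
    return {team: tokens * rate / 1_000_000 for team, tokens in tokens_by_team.items()}


records = [
    {"team": "search", "prompt_tokens": 1_200_000, "completion_tokens": 800_000},
    {"team": "support", "prompt_tokens": 500_000, "completion_tokens": 500_000},
    {"team": "search", "prompt_tokens": 2_000_000, "completion_tokens": 0},
]
print(chargeback(records))
```

The same per-team totals could feed either internal chargeback or external billing; only the rate table would differ.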

🌟 Why This Changes Everything

This isn’t merely another CLI tool.

It’s a strategic infrastructure layer addressing the deepest pain point in China’s AI ecosystem: fragmentation.

“We don’t lack compute power — we lack the thread that strings the pearls together.”

Xuanwu CLI provides that thread — open, standardized, and built for interoperability. Its open-source release invites global collaboration, ensuring domestic AI chips aren’t just capable, but first-class citizens in the next wave of AI agents.

🚀 Get Started Today

- GitHub: https://github.com/TsingmaoAI/xw-cli

- GitCode: https://gitcode.com/tsingmao/xw-cli

⭐ Star it. Fork it. Submit PRs. Join the movement.

Let’s build the AI future — on our own silicon, at our own pace.

“When Xuanwu runs, thousands of AI agents awaken — not in the cloud, but on our servers, our chips, our terms.”

Article adapted from XinZhiYuan. Author: Ding Hui.