Xuanwu CLI: OpenClaw on Chinese Chips in 5 Minutes

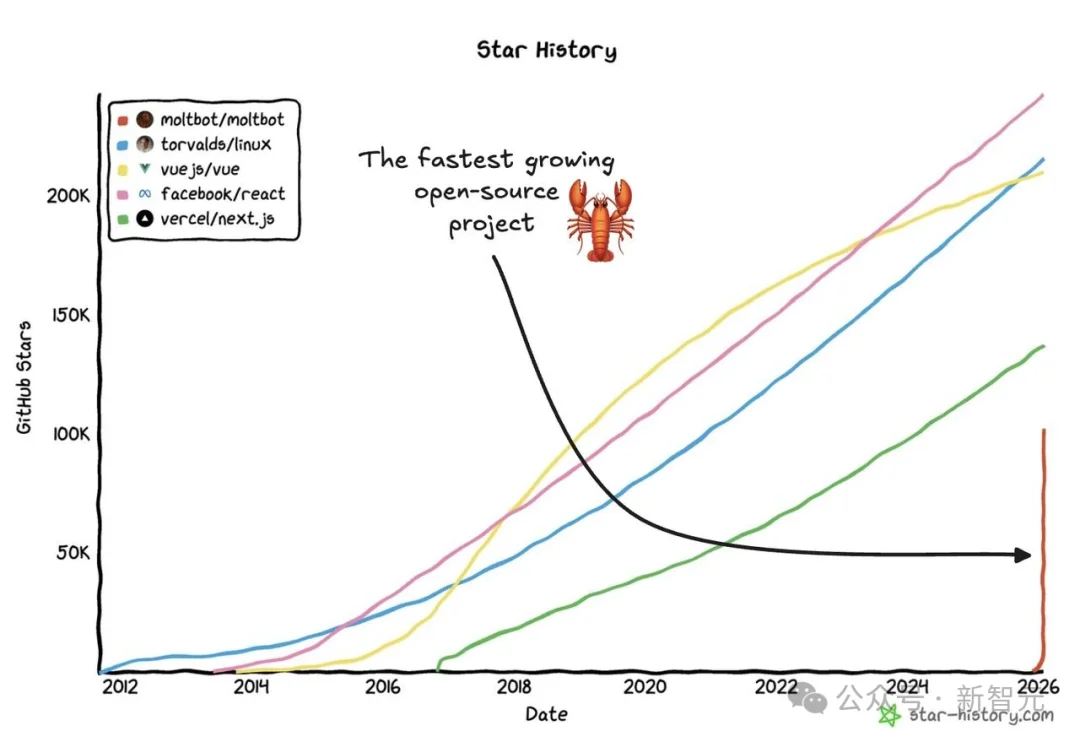

The AI agent revolution has gone global — and Clawdbot (now widely referred to as OpenClaw) is at the epicenter. With over 130,000 GitHub stars, this 24/7 autonomous “AI Jarvis” has ignited developer enthusiasm worldwide.

But there’s a catch: running it cost-effectively requires local inference, and mainstream tooling such as Ollama mainly targets NVIDIA CUDA and Apple Silicon. For users of domestic AI accelerators such as Huawei Ascend, Moore Threads MUSA, MXChip (MetaX), Hygon, Suiyuan (Enflame), Cambricon, and Kunlunxin, the path has been rocky: fragmented architectures, steep configuration hurdles, scarce documentation, and no unified abstraction layer.

💡 “Not that we don’t love domestic chips — we just can’t get them to run models.”

That ends today.

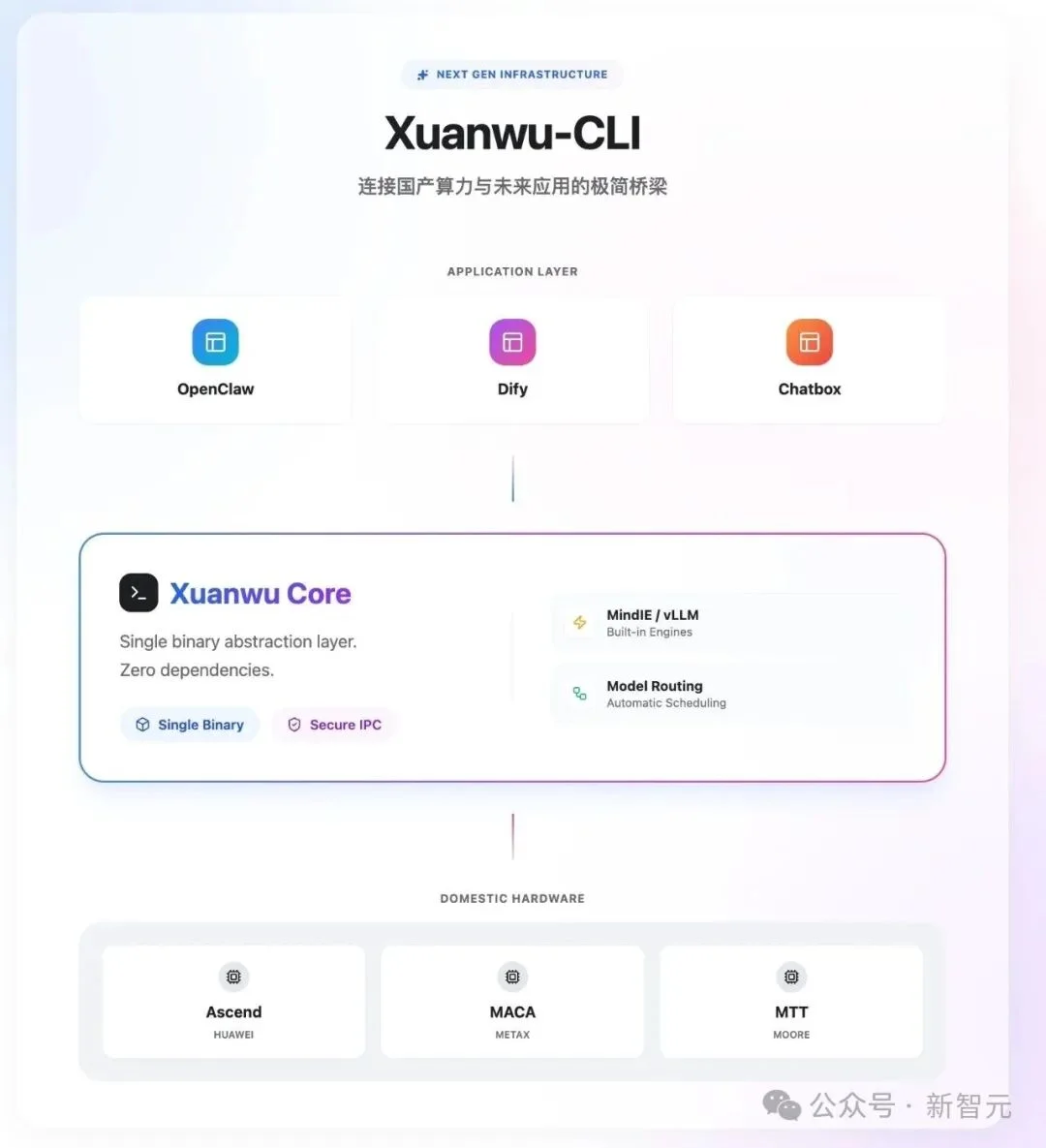

🚀 Introducing Xuanwu CLI: The Native Ollama for China’s AI Chips

Launched on February 2, 2026, Xuanwu CLI is an open-source, Docker-native command-line interface designed exclusively for Chinese AI hardware. Think of it as Ollama reimagined for Ascend, MUSA, MXChip, and more — with zero learning curve for Ollama users.

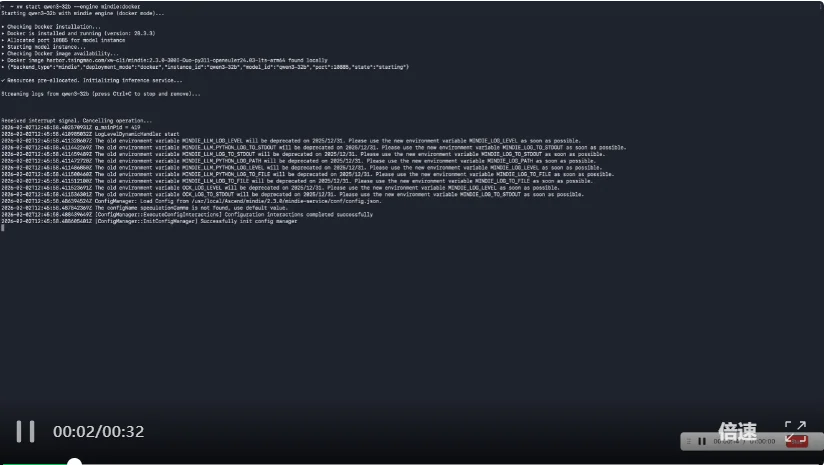

✅ 5-minute setup — xw serve, xw pull qwen3-32b, xw run — done.

✅ Consistent CLI syntax: the command set mirrors Ollama’s:

xw serve # Start local inference server

xw pull # Download quantized models

xw run # Execute chat/inference

xw list # List installed models

xw ps # Monitor active processes

✅ Sub-30s model launch for ≤32B parameter models — thanks to optimized Docker containers and auto-tuned inference engines.

🔗 Seamless Integration with OpenClaw & AI Agent Ecosystems

Xuanwu CLI isn’t just a model runner — it’s a drop-in OpenAI-compatible backend. It exposes standard /v1/chat/completions, /v1/models, and /v1/embeddings endpoints.
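Because the server speaks the OpenAI wire format, any plain HTTP client can call it. Below is a minimal sketch using only Python’s standard library. It assumes the server is reachable at `http://localhost:8080/v1`, a placeholder address since the port used by `xw serve` is not stated here, and that a model named `qwen3-32b` has already been pulled.

```python
import json
import urllib.request

# Placeholder address (assumption): adjust to whatever `xw serve` reports.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response, not server-sent events
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-format responses carry the reply in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With a server running, `chat(BASE_URL, "qwen3-32b", "Say hello in one sentence.")` returns the model’s reply as a string.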

That means:

– LangChain, LlamaIndex, and VS Code Copilot plugins work out of the box — just change your OPENAI_BASE_URL.

– No code refactoring. No logic changes. Just one config swap.
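The “one config swap” works because OpenAI-style clients resolve their base URL from configuration rather than hard-coding it. The sketch below shows that resolution logic under the same hypothetical local address; the helper names are illustrative, not part of any library.

```python
import os
from typing import Dict, Mapping, Optional

# Used when OPENAI_BASE_URL is unset: the hosted OpenAI API.
CLOUD_BASE_URL = "https://api.openai.com/v1"


def resolve_base_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API base URL, preferring the OPENAI_BASE_URL variable."""
    env = os.environ if env is None else env
    return env.get("OPENAI_BASE_URL", CLOUD_BASE_URL).rstrip("/")


def endpoint_urls(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Map the three OpenAI-style routes to full URLs under the resolved base."""
    base = resolve_base_url(env)
    return {
        "chat": f"{base}/chat/completions",
        "models": f"{base}/models",
        "embeddings": f"{base}/embeddings",
    }
```

Application code stays identical; only the environment changes, e.g. `export OPENAI_BASE_URL=http://localhost:8080/v1` (placeholder port). The official `openai` Python SDK (v1 and later) reads the same variable, although it still expects some `OPENAI_API_KEY` value to be set.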

And yes — OpenClaw now runs natively on Huawei Ascend and other domestic chips, powered by Xuanwu CLI as its inference engine.

🤖 Your Ascend server is now your 24/7 AI employee — no cloud tokens, no latency, no billing surprises.

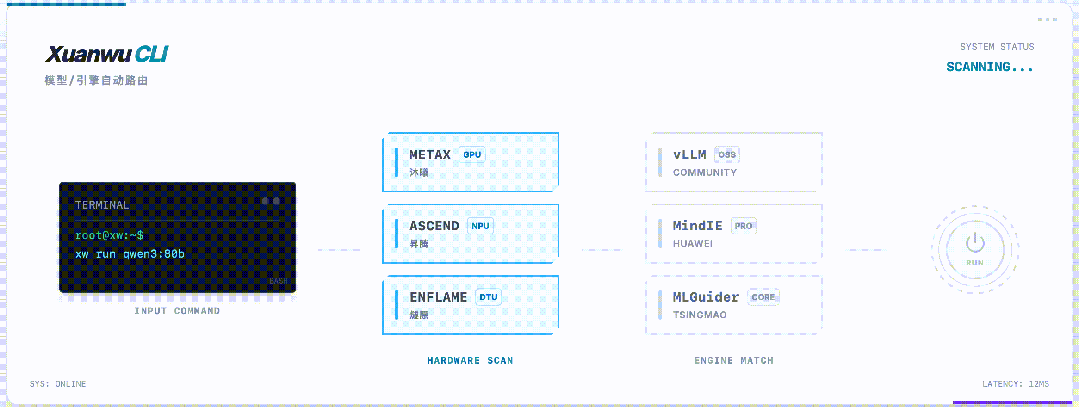

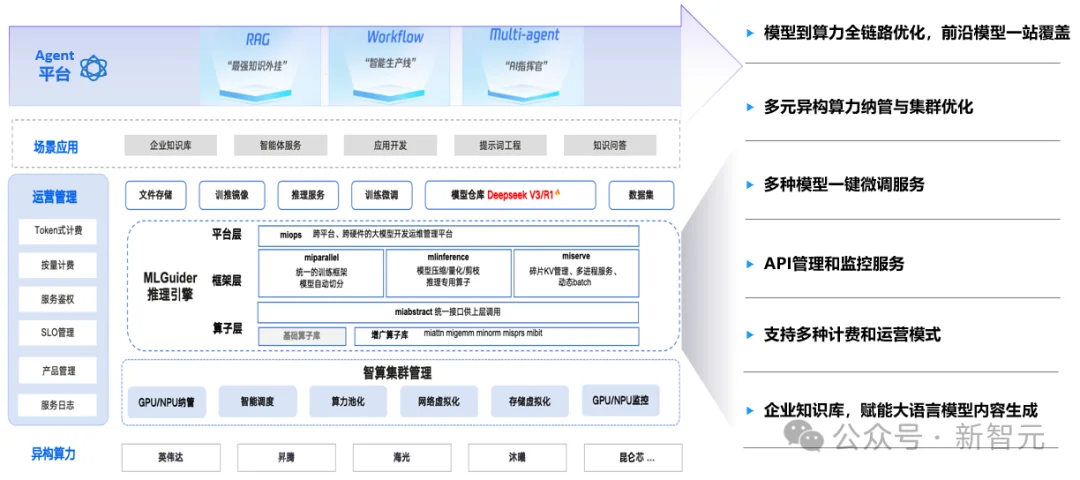

⚙️ Engine-Agnostic Architecture & Auto-Detection

Xuanwu CLI abstracts away hardware complexity via its MLGuider inference engine — a proprietary, performance-optimized runtime supporting:

– Huawei Ascend MindIE (native)

– vLLM (community standard)

– Custom quantization backends for low-bit inference

But the real breakthrough? Automatic chip detection and engine matching. Plug in your Ascend 910B, MXChip G500, or Suiyuan YUANPU — Xuanwu CLI identifies it, selects the optimal engine, configures memory mapping, and starts serving — all without user intervention.
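How Xuanwu CLI detects hardware internally is not described here, but the general idea of “detect chip, then match engine” can be sketched as a rule table. In this hypothetical sketch, only the Ascend rule reflects a known convention (Ascend NPUs show up as `/dev/davinci0`, `/dev/davinci1`, …); the engine identifiers and the MindIE-before-vLLM preference order are assumptions for illustration.

```python
import re
from typing import AbstractSet, Iterable, List, Optional, Tuple

# Hypothetical rule table: (device-node regex, chip family, engine preference).
# Rules for other vendors would go here; their device names are not given
# in the article, so they are left out rather than guessed.
DETECTION_RULES: List[Tuple[str, str, List[str]]] = [
    (r"davinci\d+", "ascend", ["mindie", "vllm"]),
]


def detect(device_names: Iterable[str]) -> Optional[Tuple[str, int, List[str]]]:
    """Return (family, device_count, engine_order) for the first matching rule."""
    names = list(device_names)
    for pattern, family, engines in DETECTION_RULES:
        count = sum(1 for name in names if re.fullmatch(pattern, name))
        if count:
            return family, count, engines
    return None  # no supported accelerator found


def pick_engine(device_names: Iterable[str], installed: AbstractSet[str]) -> Optional[str]:
    """Pick the first preferred engine for the detected chip that is installed."""
    found = detect(device_names)
    if found is None:
        return None
    _family, _count, engines = found
    for engine in engines:
        if engine in installed:
            return engine
    return None
```

On a real host you would pass `os.listdir("/dev")` and the set of engines actually present; a control node such as `davinci_manager` is deliberately not counted as an NPU.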

No more:

– Reading 500-page CANN docs

– Compiling from source

– Debugging driver version mismatches

Just ./xw-linux-amd64 serve → done.
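Scripts that start the server usually need to wait until it accepts requests before sending traffic. A minimal sketch that polls the OpenAI-style `/v1/models` route; the address is again a hypothetical placeholder, and the probe, sleep, and clock are injectable so the retry logic can be exercised without a live server.

```python
import json
import time
import urllib.request
from typing import Callable, List, TypeVar

T = TypeVar("T")


def list_models(base_url: str, timeout: float = 5.0) -> List[str]:
    """Return model IDs from an OpenAI-format GET {base_url}/models response."""
    url = base_url.rstrip("/") + "/models"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        body = json.load(resp)
    return [entry["id"] for entry in body.get("data", [])]


def wait_until_ready(
    probe: Callable[[], T],
    timeout_s: float = 60.0,
    interval_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Call probe() until it succeeds or timeout_s elapses."""
    deadline = clock() + timeout_s
    while True:
        try:
            return probe()
        except OSError as exc:  # URLError and refused connections are OSErrors
            if clock() >= deadline:
                raise TimeoutError(f"server not ready after {timeout_s}s") from exc
            sleep(interval_s)
```

Typical use: `models = wait_until_ready(lambda: list_models("http://localhost:8080/v1"))`.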

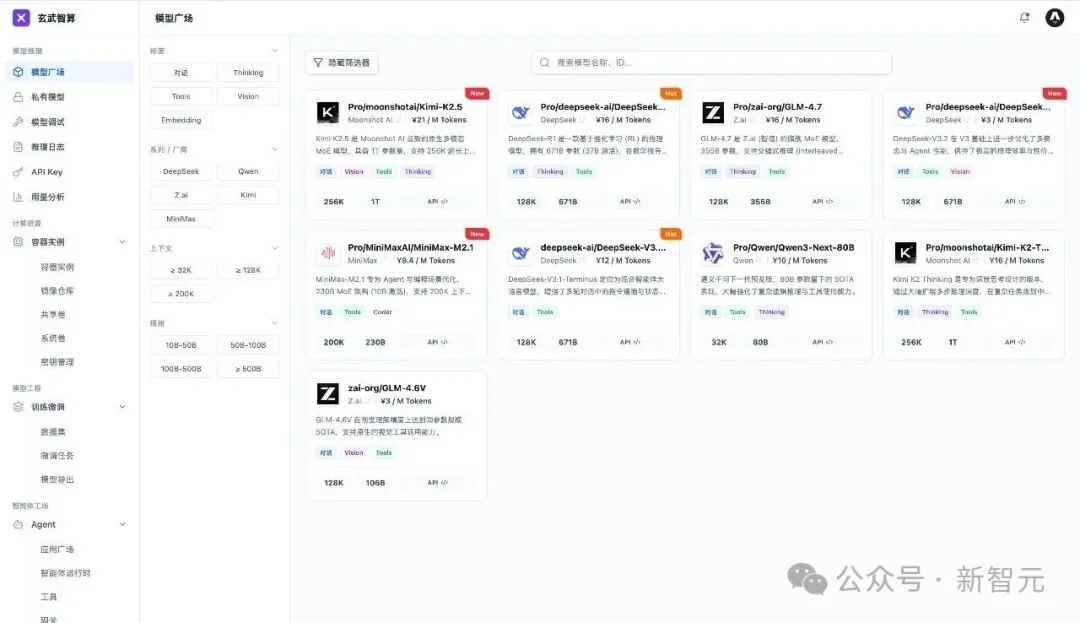

🏢 Xuanwu Cluster Edition: Unified Heterogeneous AI Infrastructure

For enterprises and AI data centers, Xuanwu Cluster Edition delivers production-grade orchestration across 10+ domestic chip families, including:

– Huawei Ascend

– Cambricon MLU

– Kunlunxin XPU

– Moore Threads GPU

– MXChip G-Series

– Suiyuan YUANPU

It unifies scheduling, monitoring, API management, and fine-grained metering — turning siloed hardware into a single, scalable, billable AI compute fabric.
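Fine-grained metering typically starts from the `usage` block that OpenAI-format responses carry (`prompt_tokens`, `completion_tokens`). Below is a minimal sketch of per-tenant cost attribution under stated assumptions: the `UsageRecord` type, tenant names, and per-1,000-token prices are hypothetical and do not describe Xuanwu Cluster Edition’s actual billing model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class UsageRecord:
    """One completed request, as a gateway might log it (hypothetical schema)."""
    tenant: str
    model: str
    prompt_tokens: int
    completion_tokens: int


# Hypothetical internal prices per 1,000 tokens: (prompt, completion).
PRICE_PER_1K: Dict[str, Tuple[float, float]] = {"qwen3-32b": (0.002, 0.006)}


def attribute_costs(records: Iterable[UsageRecord]) -> Dict[str, Dict[str, float]]:
    """Sum token counts and cost per tenant."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"prompt_tokens": 0, "completion_tokens": 0, "cost": 0.0}
    )
    for r in records:
        prompt_price, completion_price = PRICE_PER_1K[r.model]
        t = totals[r.tenant]
        t["prompt_tokens"] += r.prompt_tokens
        t["completion_tokens"] += r.completion_tokens
        t["cost"] += (r.prompt_tokens * prompt_price
                      + r.completion_tokens * completion_price) / 1000
    return dict(totals)
```

Pricing prompt and completion tokens separately is a common convention; a real deployment might instead meter accelerator-seconds per chip family.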

Key capabilities:

– Heterogeneous resource pooling — abstract away vendor lock-in

– Multi-tenant API gateway with rate limiting & quotas

– Real-time usage analytics & cost attribution

– Zero-trust offline operation — all models & data stay on-prem
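Rate limiting and quotas in a multi-tenant gateway can be illustrated with a token bucket, a common technique swapped in here for illustration; the article does not say which algorithm Xuanwu Cluster Edition uses. `TenantGate`, its parameters, and the request-count quota are hypothetical.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill proportionally to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TenantGate:
    """Per-tenant rate limit plus a hard quota on total admitted requests."""

    def __init__(self, rate: float, burst: float, quota: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate, self.burst, self.quota, self.clock = rate, burst, quota, clock
        self.buckets: Dict[str, TokenBucket] = {}
        self.used: Dict[str, int] = {}

    def admit(self, tenant: str) -> bool:
        if self.used.get(tenant, 0) >= self.quota:
            return False  # quota exhausted for this tenant
        if tenant not in self.buckets:
            self.buckets[tenant] = TokenBucket(self.rate, self.burst, self.clock)
        if not self.buckets[tenant].allow():
            return False  # rate limited; does not count against the quota
        self.used[tenant] = self.used.get(tenant, 0) + 1
        return True
```

Each tenant gets its own bucket, so one noisy tenant cannot exhaust another’s capacity.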

🌐 Why This Changes Everything

This isn’t just another CLI tool.

It’s a strategic bridge — between China’s world-class AI hardware and the global open agent ecosystem.

Historically, ecosystem dominance has come not from raw FLOPs but from developer experience. Xuanwu CLI flips the script:

– ✅ Lowers barrier to entry for domestic hardware

– ✅ Enables rapid prototyping and production deployment

– ✅ Accelerates adoption of AI agents on sovereign infrastructure

– ✅ Turns hardware fragmentation into a strength — via unified abstraction

🔑 “We never lacked compute power. We lacked the thread to string the pearls together. Xuanwu is that thread.”

📣 Get Involved

Xuanwu CLI is 100% open source, MIT-licensed, and actively maintained by Tsingmao AI.

✨ Contribute today:

– GitHub Repository

– GitCode Mirror

⭐ Star it. 🍴 Fork it. 🐛 Report issues. 📥 Submit PRs.


Article originally published by Xin Zhī Yuán (New Intelligence Era), author Ding Hui.