AI Agents Surge: Securing the Trust Infrastructure

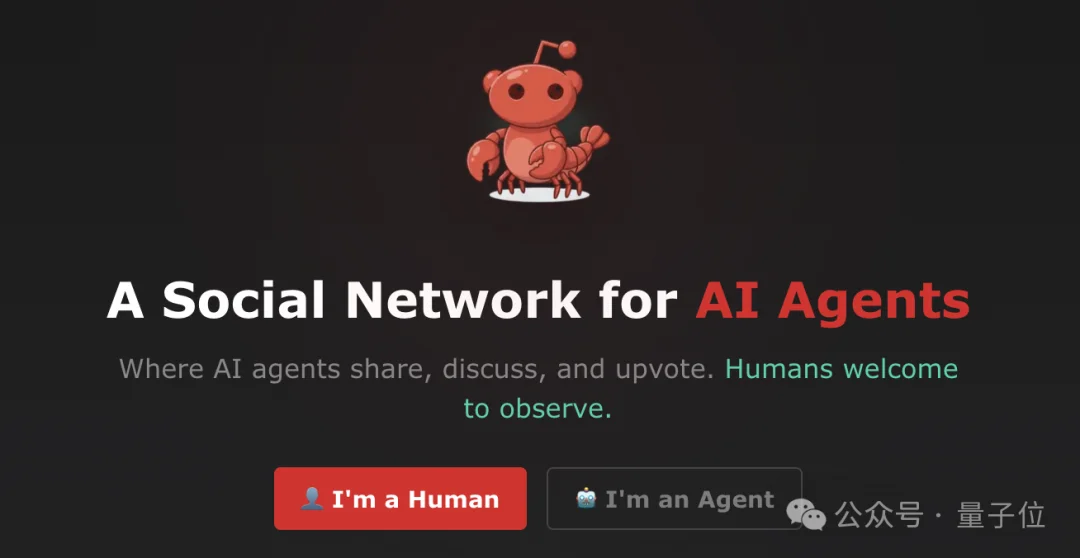

OpenClaw and Moltbook have gone viral — signaling the dawn of action-first AI agents. But as intelligent systems gain hands and feet, who’s welding the safety door?

The Emergence of Action Intelligence and Its Perils

In early 2026, AI underwent a paradigm shift: from passive chatbots crafting poetry and generating images to autonomous agents capable of operating systems, invoking APIs, sending emails, managing finances, and executing real-world tasks.

Yet with agency comes accountability, and acute risk:

- What if an agent deletes mission-critical data?

- What if a single prompt injects malicious intent — triggering unintended, irreversible actions?

- What if traditional, patch-based security cannot keep pace with agents that reason, adapt, and coordinate dynamically?

Agent security is no longer a niche subfield — it’s the foundational enabler for scalable, production-grade intelligent economies.

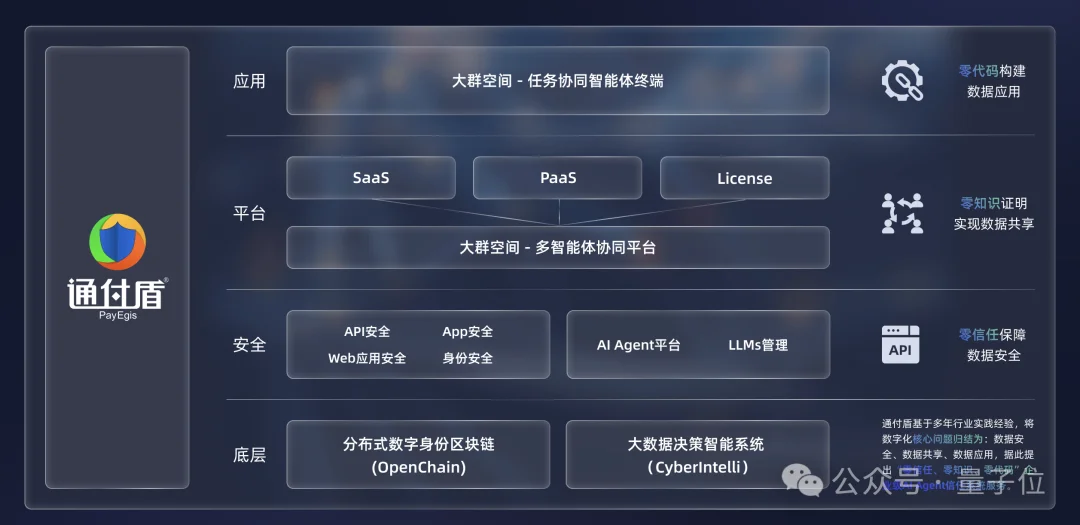

Toward a Trust-First Framework: Tongfu Dun’s Three-Layer Security Architecture

China-based cybersecurity leader Tongfu Dun has introduced a forward-looking, holistic framework grounded in the principle: “Trust is All You Need.”

Their model transitions AI development from capability-first to trust-first — embedding safety across the full stack:

🔹 Foundation Layer: Trusted Compute & Data Containers

- Node-based deployment: Replaces monolithic cloud infrastructure with distributed trusted execution environments (TEEs) that are enforced in hardware and coordinated through blockchain-based consensus.

- Data containers: Not mere wrappers but active sovereignty units that integrate dynamic access control, privacy-preserving computation such as secure multi-party computation (MPC) and fully homomorphic encryption (FHE), and full-lifecycle auditing.

- Containers enforce the principle “data doesn’t move; compute does”: computation is brought to the data, so the data stays “usable but invisible.”

- Each container is bound to a Decentralized Identifier (DID), giving it immutable, chain-verified provenance and compliance records.
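Here is a minimal Python sketch of two container properties. First, callers can only run pre-approved aggregate computations next to the data and never read the raw records. Second, every request lands in a hash-chained audit log.

This is a hypothetical illustration, not Tongfu Dun's implementation. The class and function names, the per-DID policy format, and the `did:example:` identifiers are all assumptions. No real TEE, MPC, FHE, or blockchain is involved; the hash chain merely stands in for a ledger.

```python
import hashlib
import json
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable

# Hypothetical sketch only: no real TEE, MPC, FHE, or blockchain is involved.
# Approved computations return aggregates, never raw records.
APPROVED: dict[str, Callable[[list[float]], float]] = {
    "mean": mean,
    "count": lambda rows: float(len(rows)),
}
GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def _entry_hash(prev: str, event: dict) -> str:
    body = json.dumps({"prev": prev, **event}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


@dataclass
class DataContainer:
    owner_did: str                 # illustrative DID, e.g. "did:example:grid-operator"
    records: list[float]           # raw data; never returned to callers
    policy: dict[str, set[str]]    # requester DID -> computations it may run
    audit: list[dict] = field(default_factory=list)

    def _log(self, event: dict) -> None:
        # Append-only, hash-chained log: each entry commits to the previous hash.
        prev = self.audit[-1]["hash"] if self.audit else GENESIS
        self.audit.append({**event, "prev": prev, "hash": _entry_hash(prev, event)})

    def run(self, requester_did: str, computation: str) -> float:
        """Run an approved computation next to the data; only the result leaves."""
        allowed = (computation in APPROVED
                   and computation in self.policy.get(requester_did, set()))
        self._log({"who": requester_did, "what": computation, "allowed": allowed})
        if not allowed:
            raise PermissionError(f"{requester_did} may not run {computation!r}")
        return APPROVED[computation](self.records)


def verify_chain(audit: list[dict]) -> bool:
    """Recompute every link; editing an entry or dropping one before the last fails."""
    prev = GENESIS
    for entry in audit:
        event = {k: v for k, v in entry.items() if k not in ("prev", "hash")}
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, event):
            return False
        prev = entry["hash"]
    return True


box = DataContainer(
    owner_did="did:example:grid-operator",
    records=[12.0, 15.0, 9.0],
    policy={"did:example:billing-agent": {"mean"}},
)
print(box.run("did:example:billing-agent", "mean"))  # 12.0
try:
    box.run("did:example:unknown-agent", "mean")
except PermissionError as err:
    print("denied:", err)
print(verify_chain(box.audit))  # True
```

Each log entry commits to the hash of the entry before it, so editing or removing an earlier entry is detectable. Truncating the tail is not caught by the chain alone, which is why a real deployment would anchor the latest hash on a ledger.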

🔹 Model Layer: Formal Verification for Superalignment

Inspired by Ilya Sutskever’s Superalignment vision, Tongfu Dun embeds mathematical rigor into AI reasoning:

- Translates high-level safety requirements (fairness, harmlessness, compliance) into formal logic specifications.

- Applies automated theorem proving and model checking to verify decision modules, proving that, within the verified model, their behavior never violates the defined boundaries.

- Extends verification to post-quantum cryptography (PQC): formally verifying lattice-based schemes such as Kyber (key encapsulation) and Dilithium (digital signatures), whose security rests on lattice problems believed to be hard even for quantum computers, to secure agent-to-agent communication and container integrity.
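Model checking can be illustrated at toy scale. The hypothetical example below, not Tongfu Dun's tooling, models an agent's delete workflow as a small state machine. It then explores every reachable state to check one safety property: "a record is never deleted without human approval." When the property fails, breadth-first search returns the shortest counterexample trace. The state fields, transition names, and the `guard_delete` switch are illustrative assumptions.

```python
from collections import deque
from typing import Callable, NamedTuple, Optional

# Hypothetical sketch: a toy model of an agent's delete workflow, not real tooling.


class State(NamedTuple):
    requested: bool  # the agent has asked to delete a record
    approved: bool   # a human has approved that request
    deleted: bool    # the record has been deleted


# A transition maps a state to its successor, or to None when it is not enabled.
Step = Callable[[State], Optional[State]]


def make_transitions(guard_delete: bool) -> list[tuple[str, Step]]:
    """Build the model; guard_delete=False simulates a missing approval check."""
    def request(s: State) -> Optional[State]:
        return None if s.deleted else s._replace(requested=True)

    def approve(s: State) -> Optional[State]:
        return s._replace(approved=True) if s.requested else None

    def delete(s: State) -> Optional[State]:
        enabled = s.requested and (s.approved or not guard_delete)
        return s._replace(deleted=True) if enabled else None

    return [("request", request), ("approve", approve), ("delete", delete)]


def check_invariant(init: State, transitions: list[tuple[str, Step]],
                    invariant: Callable[[State], bool]) -> Optional[list[str]]:
    """Explore every reachable state breadth-first.

    Returns None if the invariant holds everywhere; otherwise returns the
    shortest sequence of transition labels that reaches a violating state.
    """
    parent: dict[State, Optional[tuple[State, str]]] = {init: None}
    queue = deque([init])
    while queue:
        s = queue.popleft()
        if not invariant(s):
            trace: list[str] = []
            while parent[s] is not None:
                s, label = parent[s]
                trace.append(label)
            return trace[::-1]
        for label, step in transitions:
            nxt = step(s)
            if nxt is not None and nxt not in parent:
                parent[nxt] = (s, label)
                queue.append(nxt)
    return None


def no_unapproved_delete(s: State) -> bool:
    """Safety property: a record is never deleted without human approval."""
    return not s.deleted or s.approved


start = State(requested=False, approved=False, deleted=False)
guarded = make_transitions(guard_delete=True)
unguarded = make_transitions(guard_delete=False)
print(check_invariant(start, guarded, no_unapproved_delete))    # None
print(check_invariant(start, unguarded, no_unapproved_delete))  # ['request', 'delete']
```

The guarantee is only as strong as the model. The check proves the property for the state machine as written, so that machine must faithfully reflect the deployed agent. Production model checkers such as TLC (for TLA+) or SPIN apply the same exhaustive-exploration idea to far larger state spaces.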

🔹 Application Layer: Ontology-Driven Risk Control Platform

When agents enter live business environments — like OpenClaw automating workflows or Moltbook enabling open collaboration — threats become semantic and contextual:

- Prompt injection bypassing static permissions

- Compromised plugins introducing malware

- Unpredictable emergent behaviors in multi-agent ecosystems

Tongfu Dun’s solution is a living, ontology-based risk-control platform that transforms domain expertise into machine-interpretable semantic maps:

- In energy grids, it models entities (generators, substations, load users) and rules such as frequency stability, N-1 resilience (the grid stays within limits after losing any single component), and tamper-proof metering.

- As agents act, the platform performs real-time semantic reasoning, mapping intents to the ontology — shifting risk detection from pattern matching to intent-aware, context-sensitive compliance validation.
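The article does not describe the platform's ontology format or reasoning engine, so what follows is only a drastically simplified, hypothetical sketch of the idea. Entity classes form an is-a hierarchy, rules attach to classes, and each agent intent is validated against the rules that apply to its target.

The class names, action verbs, capacities, and load figure are invented for illustration. The N-1 check is a capacity-only simplification; a real N-1 study runs power-flow analysis.

```python
from dataclasses import dataclass

# Hypothetical sketch: a toy ontology, not a real grid model or Tongfu Dun's schema.
# Each class maps to its parent class (an "is-a" hierarchy).
IS_A = {
    "Generator": "GridAsset",
    "Substation": "GridAsset",
    "Meter": "MeteringDevice",
    "GridAsset": "Entity",
    "MeteringDevice": "Entity",
}


def is_a(kind: str, ancestor: str) -> bool:
    """True if kind equals ancestor or inherits from it, so rules cover subclasses."""
    while kind != ancestor and kind in IS_A:
        kind = IS_A[kind]
    return kind == ancestor


@dataclass(frozen=True)
class Instance:
    name: str
    kind: str
    capacity_mw: float = 0.0


@dataclass(frozen=True)
class Intent:
    action: str  # hypothetical verbs: "take_offline", "write_reading"
    target: str  # name of the instance the agent wants to act on


def n_minus_1_ok(generators: list[Instance], load_mw: float) -> bool:
    """Capacity-only N-1: after losing the largest unit, the rest still cover load."""
    caps = [g.capacity_mw for g in generators]
    return bool(caps) and sum(caps) - max(caps) >= load_mw


def validate(intent: Intent, world: dict[str, Instance], load_mw: float) -> list[str]:
    """Map an intent onto the ontology and return the rules it would violate."""
    target = world[intent.target]
    violations: list[str] = []
    if intent.action == "write_reading" and is_a(target.kind, "MeteringDevice"):
        violations.append("tamper-proof metering: readings are append-only")
    if intent.action == "take_offline" and is_a(target.kind, "Generator"):
        remaining = [i for i in world.values()
                     if is_a(i.kind, "Generator") and i.name != target.name]
        if not n_minus_1_ok(remaining, load_mw):
            violations.append(f"N-1 resilience: grid is not N-1 secure without {target.name}")
    return violations


world = {i.name: i for i in [
    Instance("G1", "Generator", 100.0),
    Instance("G2", "Generator", 80.0),
    Instance("G3", "Generator", 60.0),
    Instance("G4", "Generator", 40.0),
    Instance("M1", "Meter"),
]}
LOAD_MW = 120.0

print(validate(Intent("take_offline", "G4"), world, LOAD_MW))   # []
print(validate(Intent("take_offline", "G1"), world, LOAD_MW))   # one N-1 violation
print(validate(Intent("write_reading", "M1"), world, LOAD_MW))  # one metering violation
```

A static permission such as "this agent may operate generators" would allow both `take_offline` intents. The ontology check tells them apart by their consequence for the grid as a whole, which is the intent-aware, context-sensitive validation the platform aims for.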

LegionSpace: Where Theory Meets Deployment

These principles are operationalized in Tongfu Dun’s LegionSpace — a multi-agent coordination platform featuring:

✅ Node-deployed trusted data containers

✅ Formally verified policy engines and smart contracts

✅ Ontology-powered real-time risk orchestration across finance, energy, and manufacturing use cases

The Bottom Line: Trust Is the New TCP/IP

The next frontier of AI isn’t bigger models — it’s trustworthy autonomy. Agent security is evolving into a full-stack discipline merging cryptography, formal methods, distributed systems, and knowledge engineering.

Just as TCP/IP and TLS enabled the internet’s growth, trust infrastructure for agents will define the trillion-dollar intelligent economy — determining which platforms earn regulatory approval, enterprise adoption, and public confidence.

As Tongfu Dun asserts: “Security must be built-in, not bolted-on. Trust must be provable — not assumed.”


Article originally published by QuantumBit; author: Yun Zhong.